
This article started with a conversation.

One of my engineering managers pointed out a troubling pattern. Teams continued to deliver even when builds failed or tests flaked, and they did so without pausing or showing any curiosity.

“The pipeline is failing, but no one’s looking at it.”

He noticed some engineers weren’t following their code after it went into production. They weren’t checking logs or using observability tools. Meanwhile, others followed through, checking metrics, monitoring logs, and validating behavior. Then he asked:

“We do performance reviews for individuals; why not for teams?”

And it struck me: we don’t, not in any meaningful, structured way. We hold individuals accountable for how they show up, but teams, the unit that delivers value, are too often assessed only by their outputs, not their behaviors.

This article isn’t a proposal. It’s a prompt.

A Reflection More Than a Framework

This isn’t a manager’s tool or a leadership scorecard. It’s a guide for teams looking to improve how they collaborate with purpose. It’s for delivery teams that value their habits just as much as their results.

Use it as a retro exercise. A quarterly reset. A mirror.

Why Team Reflection Matters

We already measure delivery performance. DORA. Flow. Developer Experience.

But those metrics don’t always answer:

- Are we doing what we said mattered, like observability and test coverage?

- Are we working as a team or as individuals executing in parallel?

- Do we hold each other accountable for delivering with integrity?

This is the gap: how teams work together. This guide helps fill it, not to replace metrics, but to deepen the story they tell.

What This Is (And Isn’t)

You might ask: “Don’t SAFe, SPACE, DORA, or Flow Metrics already do this?”

Yes and no. Those frameworks are valuable. But they answer different questions:

- DORA & Flow: How fast and stable is our delivery?

- DX Core 4 & SPACE: How do developers feel about their work environment?

- Maturity Models: How fully have we adopted Agile practices?

- SAFe’s Measure and Grow: For organizations implementing SAFe, how agile is the enterprise across dimensions such as team agility, product delivery, and lean leadership?

What they don’t always show is:

- Are we skipping discipline under pressure?

- Do we collaborate across roles or operate in silos?

- Are we shipping through red builds and hoping for the best?

But the question stuck with me:

Shouldn’t we do the same for teams if we hold individuals accountable for how they show up?

What follows is a framework and a conversation starter, not a mandate. It’s just something to consider because, in many organizations, teams are where the real impact (or dysfunction) lives.

Suggested Team Reflection Dimensions

You don’t need to use all twelve categories. Start with the ones that matter most to your team, or define your own. This section is designed to help teams reflect on how they work together, not just what they deliver.

But before diving into individual dimensions, start with this simple but powerful check-in.

Would We Consider Ourselves Underperforming, Performing, or High-Performing?

This question encourages self-awareness without any external judgment. The team should decide together: no scorecards, no leadership evaluations, just a shared reflection on your experience as a delivery team.

From there, explore:

- What makes us feel that way? What behaviors, habits, or examples support our self-assessment?

- What should we keep doing? What’s already working well that we want to protect or double down on?

- What should we stop doing? What’s causing friction, waste, or misalignment?

- What should we start doing? What’s missing that could improve how we operate?

This discussion often surfaces more actionable insight than metrics alone. It grounds the assessment in the team’s shared experience and sets the tone for improvement, not judgment.

A Flexible Self-Evaluation Scorecard

While this isn’t designed as a top-down performance tool, teams can use it as a self-evaluation scorecard if they choose. The reflection tables that follow can help teams:

- Identify where they align today: underperforming, performing, or high-performing.

- Recognize the dimensions where they excel and where they have room to improve.

- Prioritize the changes that will have the greatest impact on how they deliver.

No two teams will see the same patterns, and that’s the point. Use the guidance below not as a measurement of worth but as a compass to help your team navigate toward better outcomes together.

The 12-Dimension Agile Team Performance Assessment Framework

These dimensions serve as valuable tools for self-assessments, retrospectives, or leadership reviews, offering a framework to evaluate not just what teams deliver, but how effectively they perform.

- Collaboration & Communication

- Planning & Execution

- Data-Driven Improvement

- Code Quality & Technical Health

- Observability & Operational Readiness

- Flow & Efficiency

- Customer & Business Focus

- Role Clarity & Balanced Decision-Making

- Business-Technical Integration

- Engineering Discipline & Best Practice Adoption

- Accountability & Delivery Integrity

- Capabilities & Adaptability

These dimensions help teams focus not just on what they’re delivering but also on how their work contributes to long-term success.

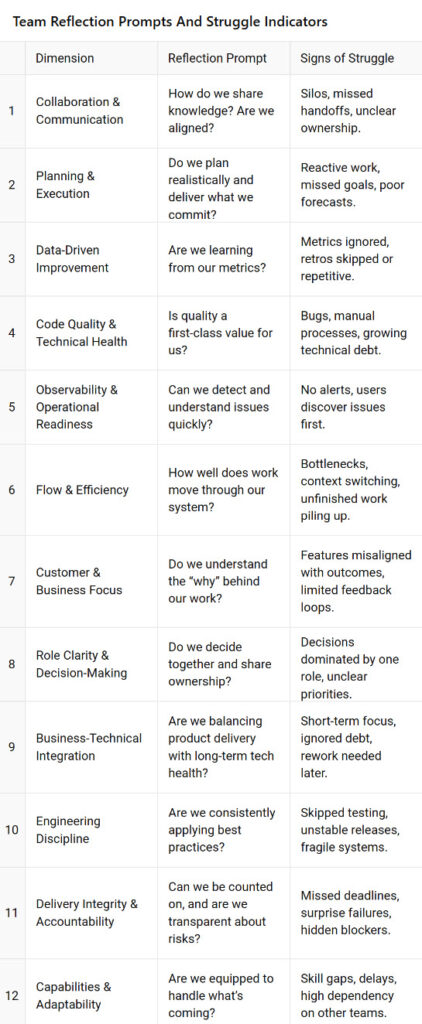

Quick Reflection Table

This simple table is a great way to start conversations. It works well for retrospectives, quarterly check-ins, or when something feels off. Each category includes a key question and signs that may indicate your team is facing challenges in that area.

Collaboration & Communication

Reflection Prompt: How do we share knowledge? Are we aligned?

Signs of struggle: Silos, missed handoffs, unclear ownership.

Planning & Execution

Reflection Prompt: Do we plan realistically and deliver what we commit?

Signs of struggle: Reactive work, missed goals, poor forecasts.

Data-Driven Improvement

Reflection Prompt: Are we learning from our metrics?

Signs of struggle: Metrics ignored, retros skipped or repetitive.

Code Quality & Technical Health

Reflection Prompt: Is quality a first-class value for us?

Signs of struggle: Bugs, manual processes, growing technical debt.

Observability & Operational Readiness

Reflection Prompt: Can we detect and understand issues quickly?

Signs of struggle: No alerts, users discover issues first.

Flow & Efficiency

Reflection Prompt: How well does work move through our system?

Signs of struggle: Bottlenecks, context switching, unfinished work piling up.

Customer & Business Focus

Reflection Prompt: Do we understand the “why” behind our work?

Signs of struggle: Features misaligned with outcomes, limited feedback loops.

Role Clarity & Decision-Making

Reflection Prompt: Do we decide together and share ownership?

Signs of struggle: Decisions dominated by one role, unclear priorities.

Business-Technical Integration

Reflection Prompt: Are we balancing product delivery with long-term tech health?

Signs of struggle: Short-term focus, ignored debt, rework needed later.

Engineering Discipline

Reflection Prompt: Are we consistently applying best practices?

Signs of struggle: Skipped testing, unstable releases, fragile systems.

Delivery Integrity & Accountability

Reflection Prompt: Can we be counted on, and are we transparent about risks?

Signs of struggle: Missed deadlines, surprise failures, hidden blockers.

Capabilities & Adaptability

Reflection Prompt: Are we equipped to handle what’s coming?

Signs of struggle: Skill gaps, delays, high dependency on other teams.

How this appears in table format:

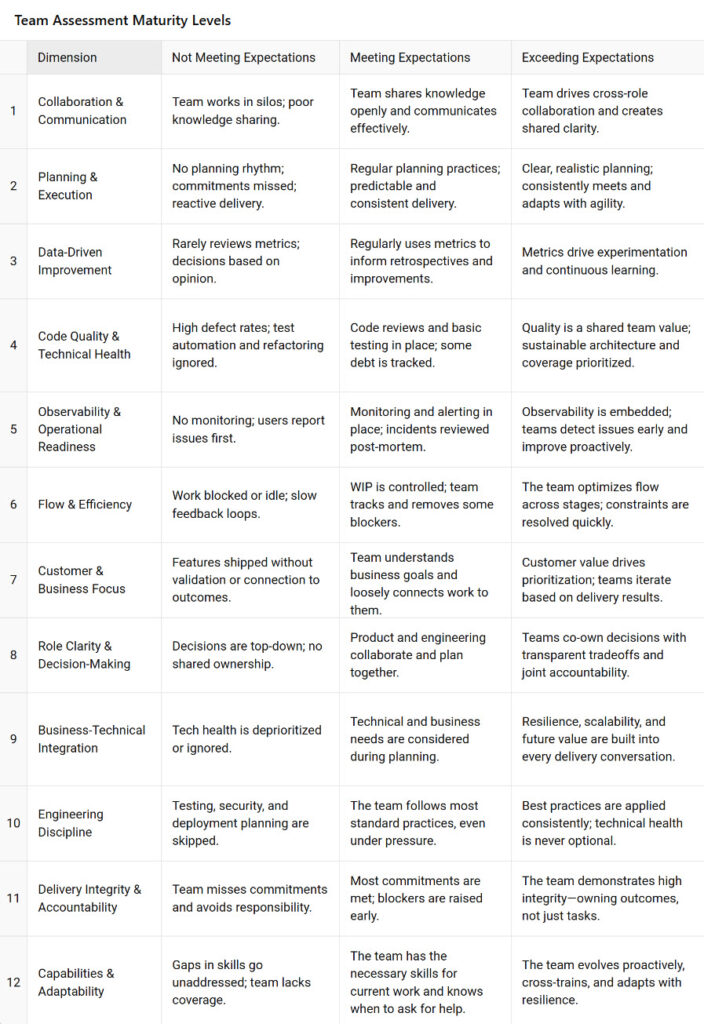

Detailed Assessment Reference

For teams looking for more detail, the next section breaks down each reflection category. It explains what “Not Meeting Expectations,” “Meeting Expectations,” and “Exceeding Expectations” look like in practice.

Collaboration & Communication

- Not Meeting Expectations: Team works in silos; poor knowledge sharing.

- Meeting Expectations: Team shares knowledge openly and communicates effectively.

- Exceeding Expectations: Team drives cross-role collaboration and creates shared clarity.

Planning & Execution

- Not Meeting Expectations: No planning rhythm; commitments missed; reactive delivery.

- Meeting Expectations: Regular planning practices; predictable and consistent delivery.

- Exceeding Expectations: Clear, realistic planning; consistently meets and adapts with agility.

Data-Driven Improvement

- Not Meeting Expectations: Rarely reviews metrics; decisions based on opinion.

- Meeting Expectations: Regularly uses metrics to inform retrospectives and improvements.

- Exceeding Expectations: Metrics drive experimentation and continuous learning.

Code Quality & Technical Health

- Not Meeting Expectations: High defect rates; test automation and refactoring ignored.

- Meeting Expectations: Code reviews and basic testing in place; some debt is tracked.

- Exceeding Expectations: Quality is a shared team value; sustainable architecture and coverage prioritized.

Observability & Operational Readiness

- Not Meeting Expectations: No monitoring; users report issues first.

- Meeting Expectations: Monitoring and alerting in place; incidents reviewed post-mortem.

- Exceeding Expectations: Observability is embedded; teams detect issues early and improve proactively.

Flow & Efficiency

- Not Meeting Expectations: Work blocked or idle; slow feedback loops.

- Meeting Expectations: WIP is controlled; team tracks and removes some blockers.

- Exceeding Expectations: The team optimizes flow across stages; constraints are resolved quickly.

Customer & Business Focus

- Not Meeting Expectations: Features shipped without validation or connection to outcomes.

- Meeting Expectations: Team understands business goals and loosely connects work to them.

- Exceeding Expectations: Customer value drives prioritization; teams iterate based on delivery results.

Role Clarity & Decision-Making

- Not Meeting Expectations: Decisions are top-down; no shared ownership.

- Meeting Expectations: Product and engineering collaborate and plan together.

- Exceeding Expectations: Teams co-own decisions with transparent tradeoffs and joint accountability.

Business-Technical Integration

- Not Meeting Expectations: Tech health is deprioritized or ignored.

- Meeting Expectations: Technical and business needs are considered during planning.

- Exceeding Expectations: Resilience, scalability, and future value are built into every delivery conversation.

Engineering Discipline

- Not Meeting Expectations: Testing, security, and deployment planning are skipped.

- Meeting Expectations: The team follows most standard practices, even under pressure.

- Exceeding Expectations: Best practices are applied consistently; technical health is never optional.

Delivery Integrity & Accountability

- Not Meeting Expectations: Team misses commitments and avoids responsibility.

- Meeting Expectations: Most commitments are met; blockers are raised early.

- Exceeding Expectations: The team demonstrates high integrity and owns outcomes, not just tasks.

Capabilities & Adaptability

- Not Meeting Expectations: Gaps in skills go unaddressed; team lacks coverage.

- Meeting Expectations: The team has the necessary skills for current work and knows when to ask for help.

- Exceeding Expectations: The team evolves proactively, cross-trains, and adapts with resilience.


These tools are meant to start conversations. Use them as a guide, not a strict scoring system, and revisit them as your team grows and changes. High-performing teams regularly reflect as part of their routine, not just occasionally. These tools are designed to help you build that habit intentionally.
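For teams that want to record a check-in somewhere lighter than a spreadsheet, the three levels above can be captured in a few lines of Python. This is only a sketch under assumptions: the `Level` enum, the `summarize` function, and the sample ratings are hypothetical names and data invented for illustration, not part of the framework itself.

```python
from enum import IntEnum


class Level(IntEnum):
    """The three levels used in the detailed assessment reference."""
    NOT_MEETING = 1
    MEETING = 2
    EXCEEDING = 3


def summarize(ratings: dict[str, Level]) -> dict[str, list[str]]:
    """Group the dimensions a team chose to rate by the level it picked.

    Dimension names are free text, so a team can rename them or add its own.
    Dimensions the team did not rate simply do not appear.
    """
    summary: dict[str, list[str]] = {level.name: [] for level in Level}
    for dimension, level in ratings.items():
        summary[level.name].append(dimension)
    return summary


# Hypothetical self-ratings agreed on by the team during a retro.
ratings = {
    "Collaboration & Communication": Level.MEETING,
    "Observability & Operational Readiness": Level.NOT_MEETING,
    "Flow & Efficiency": Level.EXCEEDING,
}

summary = summarize(ratings)
print("Room to improve:", summary["NOT_MEETING"])
print("Strengths to protect:", summary["EXCEEDING"])
```

The output is deliberately a grouping rather than a total score, which keeps the result a conversation aid instead of a grade.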

How to Use This and Who Should Be Involved

This framework isn’t a performance review. It’s a reflection tool designed for teams to assess themselves, clarify their goals, and identify areas for growth.

Here’s how to make it work:

1. Run It as a Team

Use this framework during retrospectives, quarterly check-ins, or after a major delivery milestone. Let the team lead the conversation. They’re closest to the work and best equipped to evaluate how things feel.

The goal isn’t to assign grades. It’s to pause, align, and ask: How are we doing?

2. Make It Yours

There’s no need to use all twelve dimensions. Start with the ones that resonate most. You can rename them, add new ones, or redefine what “exceeding expectations” looks like in your context.

The more it reflects your team’s values and language, the more powerful the reflection becomes.

3. Use Metrics to Support the Story, Not Replace It

Delivery data like DORA, Flow Metrics, or Developer Experience scores can add perspective. But they should inform, not replace, the conversation. Numbers are helpful, but they don’t speak for how it feels to deliver work together. Let data enrich the dialogue, not dictate it.

4. Invite Broader Perspectives

Some teams can gather anonymous 360° feedback from stakeholders or adjacent teams to surface blind spots and validate internal perceptions.

Agile Coaches or Delivery Leads can also bring an outside-in view, helping the team see patterns over time, connecting the dots across metrics and behaviors, and guiding deeper reflection. Their role isn’t to evaluate but to support growth.

5. Let the Team Decide Where They Stand

As part of the assessment, ask the team:

Would we consider ourselves underperforming, performing, or high-performing?

Then explore:

- What makes us feel that way?

- What should we keep doing?

- What should we stop doing?

- What should we start doing?

These questions give the framework meaning. They turn observation into insight and insight into action.

This Is About Ownership, Not Oversight

This reflection guide and its 12 dimensions can serve as a performance management tool, but I strongly recommend using it as a check-in resource for teams. It’s designed to build trust, encourage honest conversations, and offer a clear snapshot of the team’s current state. When used intentionally, it enhances team cohesion and strengthens overall capability. For leaders, focusing on recurring themes rather than individual scores reveals valuable patterns that can inform coaching efforts rather than impose control. Adopting it is in your hands and your team’s.
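One way to look for recurring themes rather than individual scores is to count how often a dimension is flagged across several check-ins. The sketch below assumes each check-in is stored as a plain mapping from dimension name to the level the team chose; the `recurring_themes` function, its parameters, and the sample data are hypothetical and only illustrate the idea.

```python
from collections import Counter


def recurring_themes(
    check_ins: list[dict[str, str]],
    level: str = "Not Meeting Expectations",
    min_count: int = 2,
) -> list[tuple[str, int]]:
    """Return dimensions rated at `level` in at least `min_count` check-ins.

    The result is ordered from most to least frequently flagged.
    """
    counts = Counter(
        dimension
        for check_in in check_ins
        for dimension, rating in check_in.items()
        if rating == level
    )
    return [(dim, n) for dim, n in counts.most_common() if n >= min_count]


# Hypothetical check-ins from three consecutive quarters.
check_ins = [
    {"Observability & Operational Readiness": "Not Meeting Expectations",
     "Flow & Efficiency": "Not Meeting Expectations"},
    {"Observability & Operational Readiness": "Not Meeting Expectations",
     "Flow & Efficiency": "Meeting Expectations"},
    {"Observability & Operational Readiness": "Not Meeting Expectations",
     "Flow & Efficiency": "Not Meeting Expectations",
     "Collaboration & Communication": "Not Meeting Expectations"},
]

for dimension, count in recurring_themes(check_ins):
    print(f"{dimension}: flagged in {count} check-ins")
```

A dimension that shows up once may just reflect a rough quarter; one that keeps reappearing is a better candidate for coaching attention.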

Final Thoughts

This all started with a conversation and a question: “We do performance reviews for individuals, but what about teams?” If we care about how individuals perform, shouldn’t we also care about how teams perform together?

High-performing teams don’t happen by accident. They succeed by focusing on both what they deliver and how they deliver it.

High-performing teams don’t just meet deadlines; they adapt, assess themselves, and improve together. This framework provides them with a starting point to make that happen.

Related Articles

If you found this helpful, here are a few related articles that explore the thinking behind this framework:

- From Feature Factory to Purpose-Driven Development: Why Anticipated Outcomes Are Non-Negotiable

- Decoding the Metrics Maze: How Platform Marketing Fuels Confusion Between SEI, VSM, and Metrics

- Navigating the Digital Product Workflow Metrics Landscape: From DORA to Comprehensive Value Stream Management Platform Solutions

Poking Holes

I invite your perspective on my posts. What are your thoughts?

Let’s talk: [email protected]