A Guide for Legacy-Minded Leaders on Using Metrics to Drive the Right Behavior

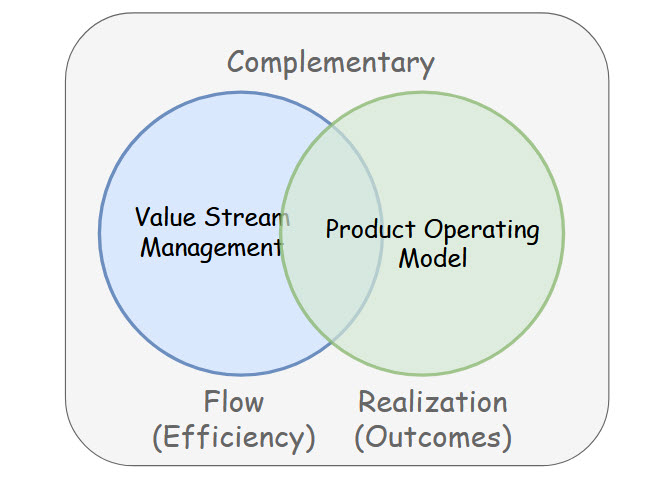

From Outputs to Outcomes

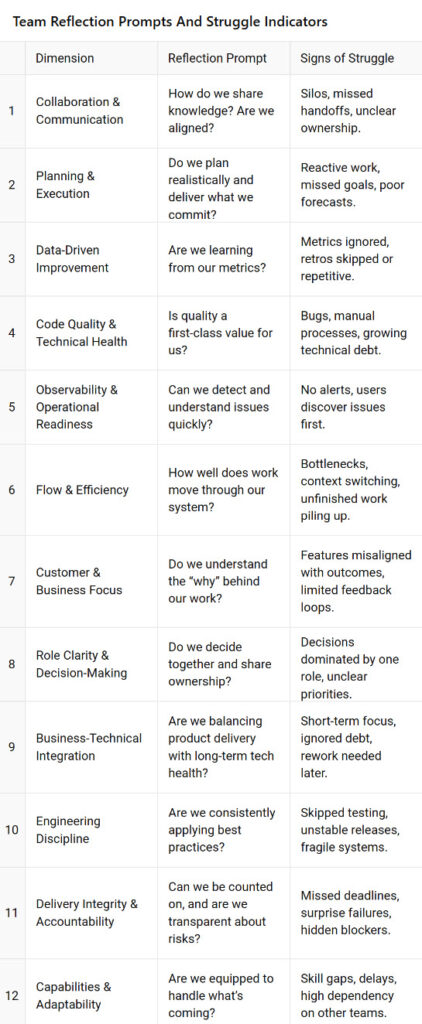

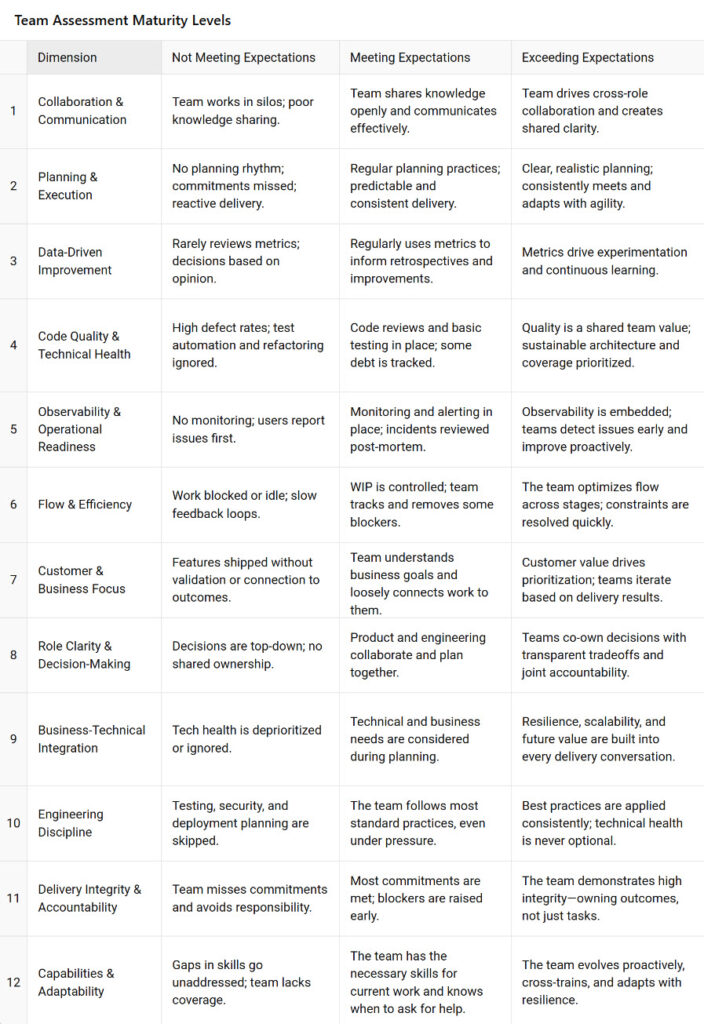

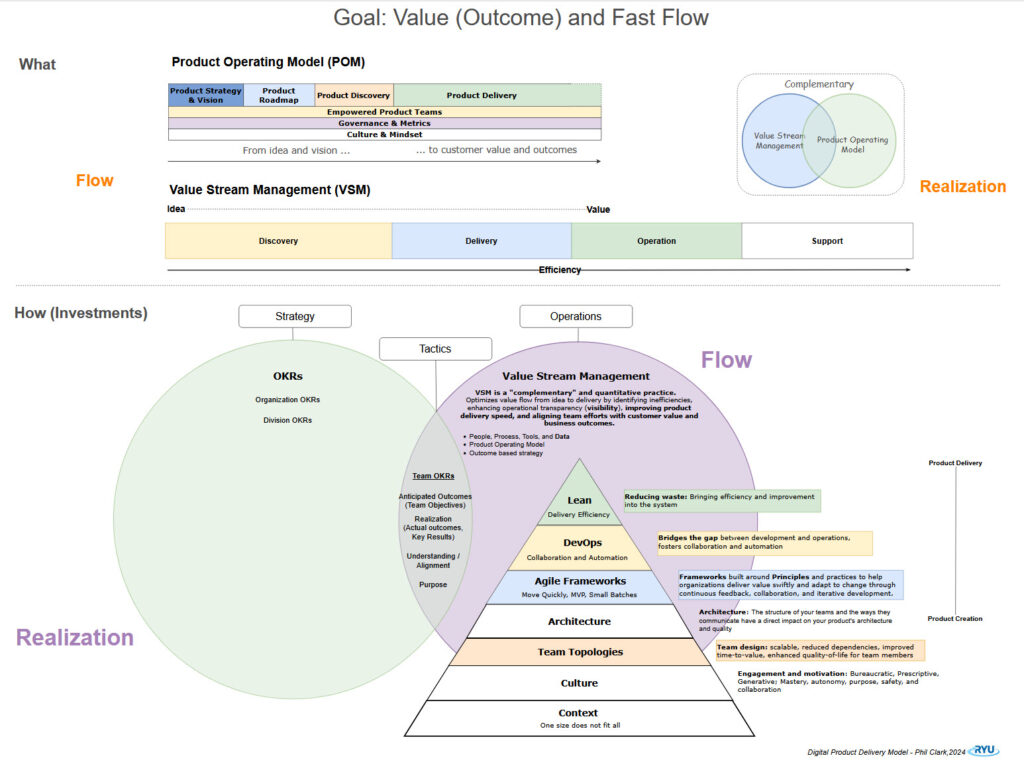

A senior executive recently asked a VP of Engineering and the Head of Architecture for industry benchmarks on Flow Metrics. At first, this seemed like a positive step, shifting the focus from individual output to team-level outcomes. However, the purpose of the request raised concerns. These benchmarks were intended to evaluate engineering managers’ performance for annual reviews and possibly bonuses.

That’s a problem. Using system-level metrics to judge individual performance is a common mistake. It might look good on paper, but it often hides deeper issues. It risks turning system-level signals into personal scorecards, creating the very dysfunction these metrics are meant to reveal and improve. Using metrics this way negates their value and invites gaming over genuine improvement.

This guide is for senior leaders adopting team-level metrics who want to use them effectively. You’ve chosen better metrics. Now, let’s make sure they work as intended.

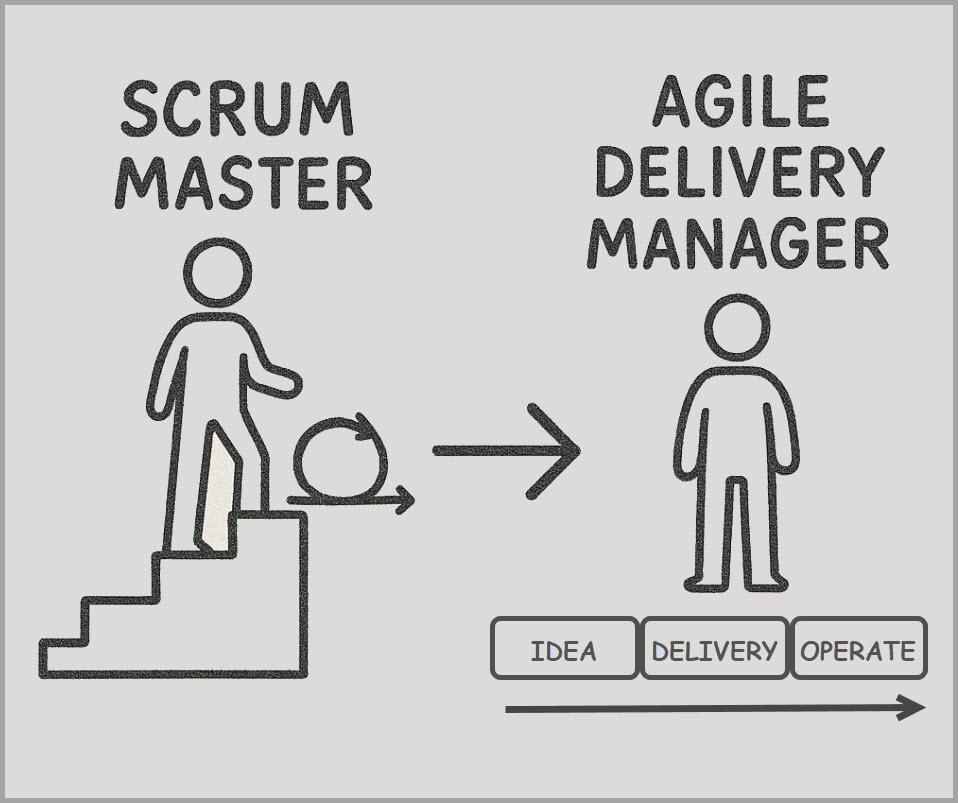

To clarify, the executive’s team structure follows the Engineering Manager (EM) model, where EMs are responsible for the performance of the delivery team. In contrast, I support an alternative approach with autonomous teams built around team topologies. These teams include all the roles needed to deliver value, without a manager embedded in the team. These are two common but very different models of team structure and performance evaluation.

This isn’t the first time I’ve seen senior leaders misuse quantitative metrics, and it likely won’t be the last. So I asked myself: now that more leaders have agreed to adopt the right metrics, do they know how to use them responsibly?

I will admit that I was frustrated to learn of this request, but the event inspired me to create a guide for leaders, especially those used to traditional, output-focused models who are new to Flow Metrics and team-level measurement. You’ve picked the right metrics; now comes the challenge: using them effectively without causing harm.

How to Use Team Metrics Without Breaking Trust or the System

1. Start by inviting teams into the process

- Don’t tell them, “Flow Efficiency must go up 10%.”

- Ask instead: “Here’s what the data shows. What’s behind this? What could we try?”

Why: Assume positive intent. Teams already want to improve. They’ll take ownership if you bring them into the process and give them time and space to act. Top-down mandates might push short-term results, but they usually kill long-term improvement.

2. Understand inputs vs. outputs

- Output metrics (like Flow Time, PR throughput, or change failure rate) are results. You don’t control them directly.

- Input metrics (like review turnaround time or number of unplanned interruptions) reflect behaviors teams can change.

Why: If you set targets on outputs, teams won’t know what to do. That’s when you get gaming or frustration. Input metrics give teams something they can improve. That’s how you get real system-level change.

I’ve been saying this for a while, and I like how Abi Noda and the DX team explain it: input vs. output metrics. It’s the same thing as leading vs. lagging indicators. Focus on what teams can influence, not just what you want to see improve.
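To make “input metric” concrete, here is a minimal Python sketch of one: review turnaround time, computed as the median hours from a pull request being opened to its first review. The `PullRequest` record, its field names, and the sample timestamps are hypothetical; real data would come from your source-control tool. The median keeps one stale PR from dominating the number.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class PullRequest:
    # Hypothetical record: field names are illustrative, not a real tool's API.
    opened_at: datetime
    first_review_at: Optional[datetime]  # None while the PR is still unreviewed


def review_turnaround_hours(prs: List[PullRequest]) -> Optional[float]:
    """Median hours from a PR being opened to its first review.

    Unreviewed PRs are skipped; returns None if no PR has been reviewed yet.
    """
    waits = [
        (pr.first_review_at - pr.opened_at) / timedelta(hours=1)
        for pr in prs
        if pr.first_review_at is not None
    ]
    return median(waits) if waits else None


# Made-up sample data for illustration.
start = datetime(2024, 5, 6, 9, 0)
prs = [
    PullRequest(start, start + timedelta(hours=2)),
    PullRequest(start, start + timedelta(hours=30)),
    PullRequest(start, start + timedelta(hours=5)),
    PullRequest(start, None),  # still waiting, excluded from the median
]
print(review_turnaround_hours(prs))  # 5.0
```

Unlike Flow Time, this is something a team can move directly, for example by agreeing on how quickly reviews get picked up.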

3. Don’t turn metrics into targets

When a measure becomes a target, it stops being useful (Goodhart’s Law).

- Don’t turn system health metrics into KPIs.

- If people feel judged by a number, they’ll focus on making the number look good instead of fixing the system.

Why: You’ll get shallow progress, not real change. And you won’t know the difference because the data will look better. The cost? Lost trust, lower morale, and bad decisions.

4. Always add context

- Depending on the situation, a 10-day Flow Time might be great or terrible.

- Ask about the team’s product, the architecture, the kind of work they do, and how much unplanned work they handle.

Why: Numbers without context are misleading. They don’t tell the story. If you act on them without understanding what’s behind them, you’ll create the wrong incentives and fix the wrong things.
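To see why context matters, consider two work items with the same 10-day Flow Time. The minimal Python sketch below assumes each item’s Flow Time splits into active and waiting time, which is how Flow Efficiency is defined (active time as a share of Flow Time). The `WorkItem` record and the numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    # Hypothetical per-item totals in days; a real tool would derive these
    # from each item's status-change history.
    active_days: float  # time someone was actively working on the item
    wait_days: float    # time the item sat blocked or queued


def flow_time(item: WorkItem) -> float:
    """Elapsed days from start to done: active time plus waiting time."""
    return item.active_days + item.wait_days


def flow_efficiency(item: WorkItem) -> float:
    """Share of Flow Time spent actively working, as a percentage."""
    total = flow_time(item)
    return 100.0 * item.active_days / total if total else 0.0


# Two items with the same 10-day Flow Time tell very different stories.
steady = WorkItem(active_days=8.0, wait_days=2.0)
stalled = WorkItem(active_days=1.0, wait_days=9.0)
for name, item in [("steady", steady), ("stalled", stalled)]:
    print(name, flow_time(item), f"{flow_efficiency(item):.0f}%")
# steady 10.0 80%
# stalled 10.0 10%
```

The first item was worked almost continuously; the second spent nine of its ten days waiting. Same Flow Time, very different conversations.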

5. Set targets the right way

- Not every metric needs a goal.

- Some should trend up; others should stay stable.

- Don’t use blanket rules like “improve everything by 10%.”

Why: Metrics behave differently. Some take months to move. Others can be gamed easily. Think about what makes sense for that metric in that context. Real improvement takes time; chasing the wrong number can do more harm than good.
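One way to apply this is to give each metric its own expected direction, or no target at all, instead of a blanket percentage. The Python sketch below is illustrative only: `Goal`, `MetricPolicy`, `assess`, and the 15% stability band are hypothetical choices, not a standard. Flow Load, the Flow Framework’s measure of work in progress, stands in as an example of a metric that should stay stable.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Goal(Enum):
    UP = "should trend up"
    DOWN = "should trend down"
    STABLE = "should stay within a band"


@dataclass
class MetricPolicy:
    # Hypothetical per-metric policy; names and thresholds are illustrative.
    name: str
    goal: Optional[Goal]     # None means: watch it for context, set no target
    tolerance: float = 0.15  # allowed relative drift, used only for STABLE


def assess(policy: MetricPolicy, baseline: float, current: float) -> str:
    """Judge a metric's movement against its own goal, not a blanket rule."""
    if policy.goal is None:
        return f"{policy.name}: observed only, no target"
    change = (current - baseline) / baseline if baseline else 0.0
    if policy.goal is Goal.STABLE:
        ok = abs(change) <= policy.tolerance
    elif policy.goal is Goal.UP:
        ok = change > 0
    else:  # Goal.DOWN
        ok = change < 0
    status = "on track" if ok else "worth a team conversation"
    return f"{policy.name} ({policy.goal.value}): {change:+.0%}, {status}"


print(assess(MetricPolicy("Review turnaround (hours)", Goal.DOWN), 20, 15))
print(assess(MetricPolicy("Flow Load", Goal.STABLE), 12, 13))
print(assess(MetricPolicy("Flow Time (days)", None), 10, 14))
```

The output is meant as a prompt for a retrospective, not a verdict on the team.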

6. Tie metrics back to outcomes and the business

- Don’t just say, “Flow Efficiency improved.” Ask, what changed?

- Did we deliver faster?

- Did we reduce the cost of delay?

- Did we create customer value?

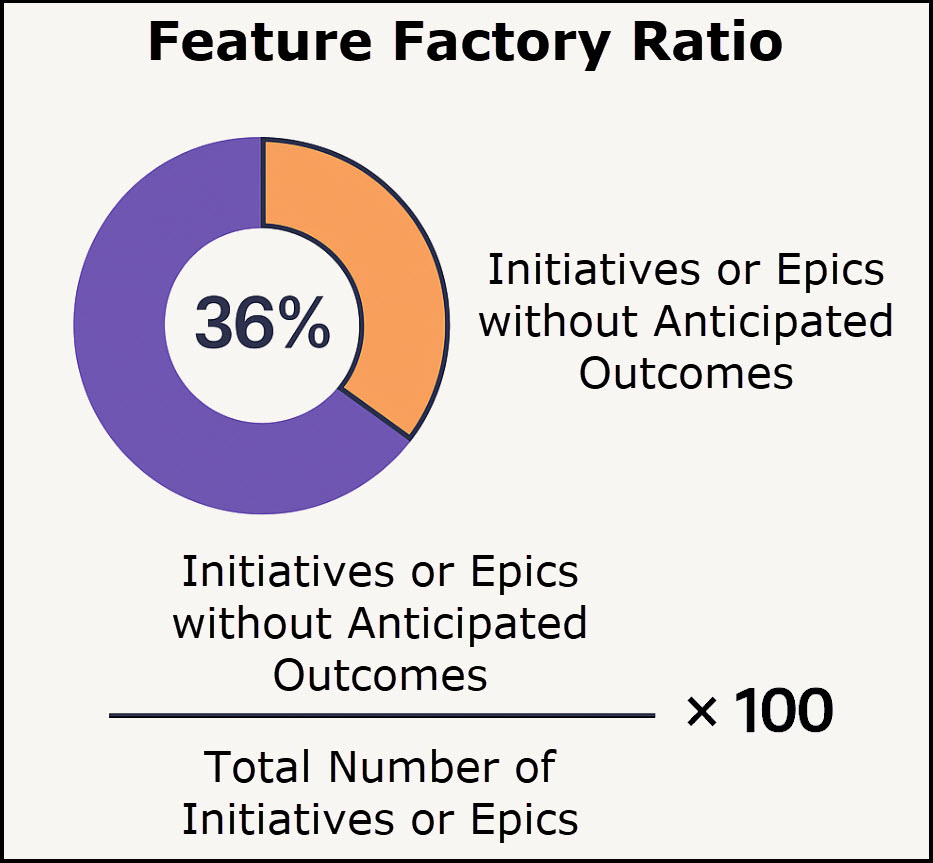

If you’ve read my other posts, you know I recommend tying every epic and initiative to an anticipated outcome. That mindset also applies to metrics. Don’t just look at the number. Ask what value it represents.

Also, it’s critical that teams use metrics to identify their bottleneck. That’s the key. Real flow improvement comes from fixing the biggest constraint.

If you improve something downstream of the bottleneck, you’re not improving flow. You’re just making things look better in one part of the system. The gain is localized, and the effort is often wasted.

Why: If the goal is better business outcomes, you must connect what the team does with how it moves the needle. Metrics are just the starting point for that conversation.
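A toy model makes the bottleneck point concrete. The Python sketch below assumes a simple serial workflow in which each stage has a fixed weekly capacity; the stage names and numbers are made up. In that model, end-to-end throughput is capped by the slowest stage, so speeding up any other stage changes nothing.

```python
from typing import Dict


def bottleneck(capacity: Dict[str, float]) -> str:
    """The stage with the lowest capacity constrains the whole system."""
    return min(capacity, key=lambda stage: capacity[stage])


def system_throughput(capacity: Dict[str, float]) -> float:
    """In a simple serial pipeline, throughput can't exceed the slowest stage."""
    return min(capacity.values())


# Hypothetical capacities in items per week, listed in workflow order.
stages = {"development": 12.0, "code review": 5.0, "test": 8.0, "deploy": 15.0}
print(bottleneck(stages), system_throughput(stages))  # code review 5.0

# Doubling a stage downstream of the bottleneck leaves throughput unchanged...
faster_test = {**stages, "test": 16.0}
print(system_throughput(faster_test))  # 5.0

# ...while relieving the bottleneck raises it until the next constraint binds.
faster_review = {**stages, "code review": 10.0}
print(bottleneck(faster_review), system_throughput(faster_review))  # test 8.0
```

Real workflows add variability and queues, so treat this as a teaching model of the Theory of Constraints idea, not a way to measure your system. The lesson carries over: find the constraint first.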

7. Don’t track too many things

- Stick to 3-5 input metrics at a time.

- Make these part of retrospectives, not just leadership dashboards.

Why: Focus drives improvement. If everything is a priority, nothing is. Too many metrics dilute the team’s energy. Let them pick the right ones and go deep.

8. Build a feedback loop that works

- Metrics are most useful when teams review them regularly.

- Make time to reflect and adapt.

We’re still experimenting with what cadence works best. Right now, monthly retrospectives are the minimum, which gives teams short feedback loops to adjust their improvement efforts. A quarterly check-in is still helpful for zooming out. Both are valuable: together, they give teams enough time to try, reflect, and adapt.

Why: Improvement requires learning. Dashboards don’t improve teams. Feedback does. Create a rhythm where teams can test ideas, measure progress, and shift direction.

A Word of Caution About Using Metrics for Performance Reviews

Some leaders ask, “Can I use Flow Metrics to evaluate my engineering managers?” You can, but it’s risky.

Flow Metrics tell you how the system is performing. They’re not designed to evaluate individuals. If you tie them to bonuses or promotions, you’ll likely get:

- Teams gaming the data

- Managers focusing on optics, not problems

- Reduced trust and openness

Why: When you make metrics part of a performance review, people stop using them for improvement. They stop learning. They play it safe. That hurts the team and the system.

Here’s what you can do instead:

- Use metrics to guide coaching conversations, not to judge.

- Evaluate managers based on how they improve the system and support their teams.

- Reward experimentation, transparency, and alignment to business value.

Performance is bigger than one number. Metrics help tell the story, but they aren’t the story.

Sidebar: What if Gamification Still Improves the Metric?

I’ve heard some folks say, “I’m okay with gamification; if the number gets better, the team gets better.”

I get where they’re coming from. Sometimes, gamification does make a number move. But here’s the problem:

- It often hides the real issues.

- It encourages people to optimize for appearances, not outcomes.

- It breaks the feedback loop you need to find the real constraints.

- It builds a culture of avoidance instead of learning.

So, while gamification might improve the score, it doesn’t consistently improve the system, and it rarely does so as efficiently as intentional, transparent work on the problem.

If the goal is long-term performance, trust the process. Let teams learn from the data. Don’t let the number become the mission.

Poking Holes

I invite your perspective on my posts. What are your thoughts?

Let’s talk: [email protected]